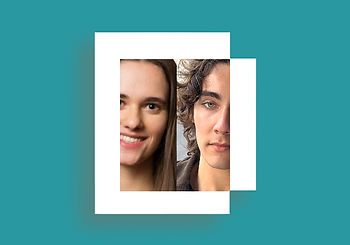

Sterling Williams-Ceci & Matt Franchi

Cornell Tech

"Biased AI Writing Assistants Shift Users' Attitudes on Societal Issues" & "Privacy of Groups in Dense Street Imagery"

Abstract

"Biased AI Writing Assistants Shift Users' Attitudes on Societal Issues": People are increasingly using AI writing assistants powered by Large Language Models (LLMs) to provide autocomplete suggestions to help them write in various online settings. However, LLMs often reproduce biased viewpoints in their text, and research from psychology has shown that manipulating people’s behavior can, in turn, influence their attitudes. Can writing with biased suggestions from AI writing assistants impact people’s attitudes in this process? In two large-scale preregistered experiments (N=2,582), my collaborators and I exposed participants writing about important societal issues to an AI writing assistant that provided biased autocomplete suggestions. When using the AI assistant, participants expressed attitudes in a post-task survey that converged towards the AI’s position. Yet, the majority of these participants were unaware of the AI suggestions’ bias and influence. Furthermore, the influence of the AI writing assistant was stronger than the influence of similar suggestions presented as static text, showing that the influence is partially due to AI writing assistants leading people to incorporate biased viewpoints into their own writing. Lastly, warning participants about AI writing assistants’ bias before or after exposure does not mitigate the influence effect. In this talk, I will present these studies and discuss the potential dangers to freedom of thought in an increasingly AI-driven society. —Sterling Williams-Ceci

"Privacy of Groups in Dense Street Imagery": Spatially and temporally dense street imagery (DSI) datasets have grown unbounded. In 2024, individual companies possessed around 3 trillion unique images of public streets. Some well-trafficked parts of Manhattan are depicted thousands of times per day. DSI data streams are only set to accumulate as networked cameras are incorporated into aftermarket dashcams, autonomous vehicles, sidewalk robots, drones, and even smart glasses. Academic researchers leverage DSI to explore novel approaches to urban analysis. Despite good-faith efforts by DSI providers to uphold individual privacy through blurring faces and license plates, these measures fail to address broader privacy concerns. In this work, we find that increased data density and advancements in artificial intelligence enable harmful group membership inferences from purportedly anonymized data. We perform a penetration test to demonstrate how easily sensitive group affiliations can be inferred from obfuscated pedestrians in 25,232,608 dashcam images taken in New York City. We develop a typology of identifiable groups within DSI and analyze implications for privacy and public space through the lens of contextual integrity. Finally (and new to this talk), we will discuss the endangerment of the individual ability to ‘opt out’ of being recorded in public, and how opportunistically collected data skews towards scandal. —Matt Franchi

About

Sterling Williams-Ceci is a PhD Candidate in Information Science at Cornell Tech, co-advised by Michael Macy and Mor Naaman. Her research focuses on unveiling unintended and undesirable effects of algorithmic technologies on our attitudes and beliefs. She hopes to gain insight into theoretical risks of AI use, to study these risks empirically, and to learn about ways to maintain ethics when studying these risks with human subjects.

Matt Franchi is a Computer Science PhD Candidate at Cornell University, based at the New York City campus, Cornell Tech. Before that, he completed a Bachelor's Degree in Computer Science and General & Departmental Honors curricula at the Clemson University Honors College (during the pandemic, unfortunately). His research has been supported by the Cornell Tech Urban Tech Hub, the Cornell Dean's Excellence Fellowship, the Siegel PiTech PhD Impact Fellowship, and the Digital Life Initiative Doctoral Fellowship.